Cold starts slow down serverless apps, frustrating users with delays. Here's how to reduce them:

What is a Cold Start? It’s the delay when a serverless function starts for the first time or after a period of inactivity. It happens because the platform needs to allocate resources, load code, and set up the environment.

Why It Matters: Delays over 300ms are noticeable, and delays over 1 second can lead to user abandonment.

How to Optimize:

Simplify code and reduce dependencies.

Keep functions "warm" during peak times.

Balance performance and cost by adjusting memory and CPU allocation.

Key Metrics to Track: Aim for initialization times under 200ms and total response times under 800ms.

Quick Tip: Use tools like Movestax to monitor performance, manage resources, and automate function warming for better results.

Want to dive deeper? Read on for actionable strategies, tools, and advanced techniques to minimize cold starts and improve serverless performance.

Measuring Cold Start Times

Monitoring Tools

Monitoring tools help track cold start times effectively. Key features to look for include:

Request tracing: Follow the function's lifecycle from start to finish.

Timing breakdowns: Understand the different phases of initialization.

Resource utilization: Keep an eye on memory and CPU usage during startup.

These insights help establish precise performance thresholds for your applications.
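The following minimal Python sketch shows what a timing breakdown can look like. Each initialization phase is timed at module scope, and that code runs once per cold start. The phase names, the `handler` shape, and the use of `json` as a stand-in dependency are assumptions for illustration only. A real setup would send these numbers to your monitoring tool rather than return them.

```python
from __future__ import annotations

import time
from contextlib import contextmanager
from typing import Iterator

# Filled in once per instance, while the module is first imported (the cold start).
INIT_PHASES_MS: dict[str, float] = {}


@contextmanager
def phase(name: str) -> Iterator[None]:
    """Record how long the enclosed block takes, in milliseconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        INIT_PHASES_MS[name] = (time.perf_counter() - start) * 1000.0


with phase("imports"):
    import json  # stand-in for the function's real dependencies

with phase("config"):
    CONFIG = json.loads('{"table": "orders"}')  # hypothetical configuration


def handler(event: dict) -> dict:
    """Hypothetical handler that reports this instance's initialization breakdown."""
    return {"table": CONFIG["table"], "init_phases_ms": dict(INIT_PHASES_MS)}
```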

Performance Metrics

Focus on these key metrics to evaluate cold start performance:

| Metric | Description | Target Range |
|---|---|---|
| Initialization Time | Time to load dependencies and set up the environment | < 200ms |
| First Byte Time | Time until the first response byte | < 300ms |
| Total Response Time | Overall processing time | < 800ms |
| Memory Usage | RAM used during startup | < 256MB |
| Dependency Load Time | Time spent importing external modules | < 100ms |

These metrics provide a clear framework for setting and achieving performance goals.
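One way to apply the table is to check measured samples against each target. The sketch below assumes you already collect samples for each metric under hypothetical key names. It compares the 95th percentile rather than the average, because cold starts affect only a fraction of requests and an average would hide them.

```python
from __future__ import annotations

import statistics

# Targets copied from the table above; the dictionary keys are hypothetical names.
TARGETS = {
    "initialization_ms": 200,
    "first_byte_ms": 300,
    "total_response_ms": 800,
    "memory_mb": 256,
    "dependency_load_ms": 100,
}


def p95(samples: list[float]) -> float:
    """95th percentile, interpolating linearly between observed samples."""
    return statistics.quantiles(samples, n=20, method="inclusive")[18]


def check_targets(measurements: dict[str, list[float]]) -> dict[str, bool]:
    """Map each known metric to True when its p95 stays below the target."""
    report = {}
    for metric, samples in measurements.items():
        if metric in TARGETS and len(samples) >= 2:  # quantiles() needs 2+ samples
            report[metric] = p95(samples) < TARGETS[metric]
    return report
```

Metrics without a target, or with too few samples, are left out of the report rather than guessed at.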

Setting Performance Targets

Here’s a breakdown of per-phase cold start time targets for different use cases:

API Endpoints

Container initialization: 200–300ms

Code loading: 100–150ms

Dependency resolution: 150–200ms

Application startup: 150–200ms

Web Applications

Static asset loading: 300–400ms

Database connections: 200–300ms

Cache warming: 150–200ms

Template compilation: 100–150ms

Background Jobs

Queue processing setup: 400–500ms

Worker initialization: 300–400ms

Resource allocation: 200–300ms

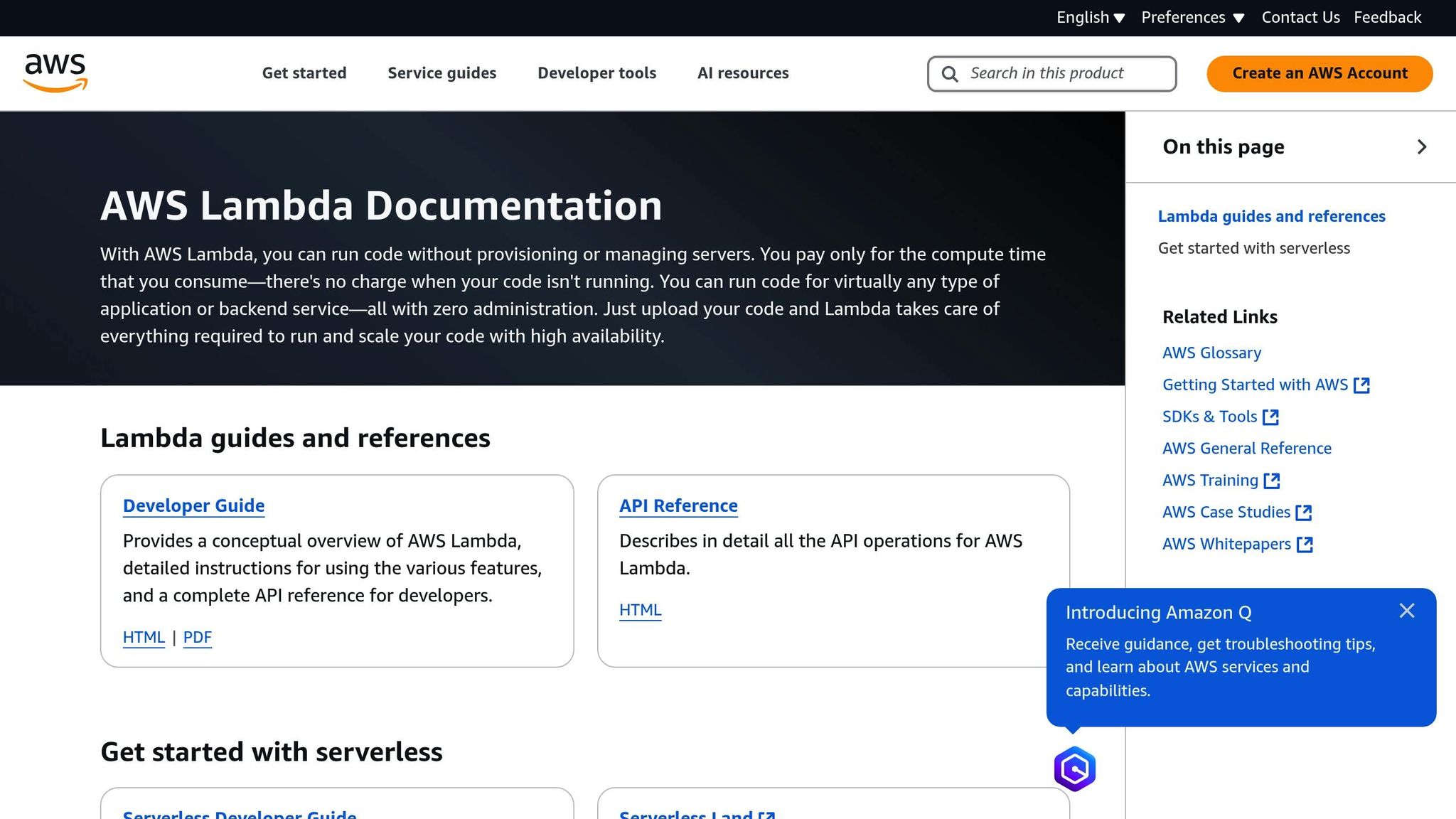

AWS Lambda: TOP 5 cold start mistakes

Code Optimization Methods

Improving your code goes hand-in-hand with platform settings and architectural choices to cut down on cold start delays.

Minimizing Dependencies

Load only the necessary code during startup by reducing dependencies. Use strategies like:

Importing specific functions instead of entire libraries

Favoring ES modules over CommonJS, since static ES module imports let bundlers remove unused exports far more reliably

Configuring bundlers (such as webpack or Rollup) to tree-shake unused code

Choose smaller, efficient external packages to keep your codebase lightweight. These practices make your code more efficient and prepare it for further optimization.
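The tips above target JavaScript bundlers, but the underlying idea carries over to other runtimes: keep rarely used dependencies out of the startup path. This minimal Python sketch assumes a hypothetical handler where only an occasional `export` action needs a heavier library, with `csv` standing in for that library.

```python
import io


def handler(event: dict) -> dict:
    """Hypothetical handler: the common path never imports the export-only dependency."""
    if event.get("action") == "export":
        # Deferred import: the first export request on an instance pays this cost;
        # later calls find the module already cached in sys.modules.
        import csv

        buffer = io.StringIO()
        csv.writer(buffer).writerows(event.get("rows", []))
        return {"status": 200, "body": buffer.getvalue()}
    return {"status": 200, "body": "pong"}
```

The trade-off is that the first request on that code path absorbs the import time. This works well when the path is rare and most requests are latency-sensitive.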

Platform Settings and Tools

Fine-tuning platform settings helps reduce cold start delays in serverless applications.

Resource Settings

Allocating more memory can speed up initialization, because on many platforms (AWS Lambda, for example) CPU power is allocated in proportion to memory. Here’s how to optimize:

Match memory allocation to the complexity of your functions.

Keep an eye on CPU usage to maintain a good performance balance.

Set timeouts that align with expected execution times.
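You can make the cost/performance trade-off explicit by benchmarking a few memory settings and choosing the cheapest one that meets an initialization budget. This Python sketch assumes cost is proportional to memory × duration, which is typical of pay-per-use pricing. The `Trial` fields and function names are hypothetical, and the inputs must be your own measurements.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    memory_mb: int       # memory setting used for the test run
    init_ms: float       # measured cold start initialization time
    duration_ms: float   # measured average billed duration per invocation


def cost_units(trial: Trial) -> float:
    """GB-milliseconds per invocation; assumes price scales with memory x duration."""
    return trial.memory_mb / 1024 * trial.duration_ms


def pick_memory(trials: list[Trial], init_budget_ms: float) -> int | None:
    """Cheapest memory setting whose initialization time fits the budget, if any."""
    eligible = [t for t in trials if t.init_ms <= init_budget_ms]
    if not eligible:
        return None
    return min(eligible, key=lambda t: (cost_units(t), t.memory_mb)).memory_mb
```

Because more CPU often shortens execution as well, a larger setting can end up cheaper per invocation. Comparing GB-ms instead of picking the smallest passing setting captures that.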

Movestax provides an intuitive interface to adjust these settings for specific needs. Once resource settings are dialed in, managing concurrency becomes the next priority.

Concurrency Management

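When a request arrives and no warm instance is free, the platform starts a new instance, and every new instance pays a cold start. Manage concurrency by setting limits, tracking usage trends, and adjusting scaling parameters as needed. Movestax continues to refine its serverless functions with improved options for handling concurrency.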
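To decide how many instances you need, Little's law gives a rough estimate: average requests in flight equal the arrival rate times the average time each request spends being handled. This Python sketch applies that formula. The function name and the 1.2 headroom factor for bursts are assumptions, not platform defaults.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_ms: float,
                         headroom: float = 1.2) -> int:
    """Estimate concurrent instances via Little's law (in-flight = arrival rate x time in system)."""
    if requests_per_second < 0 or avg_duration_ms < 0 or headroom < 1:
        raise ValueError("rate and duration must be non-negative; headroom must be >= 1")
    in_flight = requests_per_second * avg_duration_ms / 1000.0
    return math.ceil(in_flight * headroom)  # headroom is an assumed buffer for bursts
```

For example, 100 requests per second at 500ms each keeps about 50 requests in flight. That is a reasonable starting point for a concurrency limit or a minimum number of warm instances.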

With concurrency under control, you can focus on keeping functions ready with warming techniques.

Function Warming

Function warming ensures serverless functions stay responsive by reducing cold starts. Here are three key approaches:

Scheduled Warming

Schedule periodic pings, use timer-based triggers, or warm functions during peak usage times.
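A scheduled ping only helps if the function recognizes it and returns immediately. This minimal Python sketch assumes the scheduler sends an event with a hypothetical `warmup` field. The module-level flag shows whether a given invocation landed on a fresh (cold) instance.

```python
# Module scope runs once per instance, so this flag is True only until the first call.
_cold = True


def handler(event: dict) -> dict:
    """Hypothetical handler that answers scheduled warm-up pings without doing real work."""
    global _cold
    was_cold, _cold = _cold, False
    if event.get("warmup"):  # assumed marker field set by the scheduled trigger
        return {"warmed": True, "was_cold": was_cold}
    return {"status": 200, "was_cold": was_cold, "body": f"hello {event.get('name', 'world')}"}
```

One ping keeps roughly one instance warm. Bursts that need more concurrent instances will still hit cold starts.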

Pre-emptive Scaling

Analyze traffic patterns, scale resources in advance, and maintain a minimum number of active instances.
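Traffic analysis can feed a simple warm-pool schedule. This sketch assumes you have historical samples of (timestamp, concurrent executions). It sets each hour's minimum instance count to the peak seen in that hour. The function name and the `floor` default are hypothetical, and how you apply the schedule depends on your platform's scaling settings.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import datetime


def warm_pool_schedule(samples: list[tuple[datetime, int]], floor: int = 1) -> dict[int, int]:
    """Map each hour of the day to a minimum instance count: the peak concurrency
    observed in that hour across all historical samples, never below `floor`."""
    peaks: defaultdict[int, int] = defaultdict(int)
    for timestamp, concurrency in samples:
        peaks[timestamp.hour] = max(peaks[timestamp.hour], concurrency)
    return {hour: max(floor, peaks.get(hour, 0)) for hour in range(24)}
```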

Smart Routing

Direct requests to already warm instances, queue initial requests, and distribute load efficiently.
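Managed platforms usually do this routing for you, but the selection rule is easy to sketch. This Python example assumes each instance reports when it last served a request and how many requests it is currently handling. The 300-second idle timeout is a hypothetical value, because platforms do not generally publish when idle instances are reclaimed.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    last_used_s: float   # timestamp of the instance's last request (seconds)
    in_flight: int = 0   # requests it is currently handling


def choose_instance(instances: list[Instance], now_s: float,
                    idle_timeout_s: float = 300.0) -> Instance | None:
    """Pick the least-busy instance that is still warm; None means a cold start is unavoidable."""
    warm = [i for i in instances if now_s - i.last_used_s < idle_timeout_s]
    if not warm:
        return None
    return min(warm, key=lambda i: i.in_flight)
```

When `choose_instance` returns `None`, a router could queue the request briefly instead of immediately starting a new instance. That is the "queue initial requests" option above.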

Movestax includes monitoring and automation tools that streamline these processes.

Advanced Optimization

Building on earlier code and platform tweaks, these advanced strategies help reduce cold start delays even further.

Event-Driven Processing

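Event-driven systems help hide cold start delays by decoupling components and letting them interact asynchronously. When a background consumer starts cold, queued work waits a little longer, but the user-facing response does not. Here are some ways to implement this: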

Use message queues to handle tasks asynchronously

Add retry mechanisms for failed operations

Set up dead letter queues to handle errors effectively

Configure queue-based auto-scaling to match workload demands

Movestax integrates RabbitMQ to make event-driven processing easier, giving developers the tools to apply these methods without hassle.
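The retry, dead letter, and queue-based scaling ideas can be sketched without a broker. This example uses Python's in-memory `queue.Queue` as a stand-in for RabbitMQ and is not RabbitMQ's API; RabbitMQ provides its own dead letter exchanges for this. `max_attempts`, the scaling ratio, and the function names are hypothetical.

```python
from __future__ import annotations

import queue
from dataclasses import dataclass
from typing import Callable


@dataclass
class Message:
    body: str
    attempts: int = 0


def drain(work: queue.Queue, dead_letters: list[Message],
          handle: Callable[[str], None], max_attempts: int = 3) -> int:
    """Process every queued message; requeue failures until max_attempts, then dead-letter them."""
    processed = 0
    while True:
        try:
            msg = work.get_nowait()
        except queue.Empty:
            return processed
        try:
            handle(msg.body)
            processed += 1
        except Exception:
            msg.attempts += 1
            if msg.attempts >= max_attempts:
                dead_letters.append(msg)  # park it for inspection instead of retrying forever
            else:
                work.put(msg)  # retry later, behind messages already waiting


def workers_for_backlog(backlog: int, per_worker: int, max_workers: int) -> int:
    """Queue-based scaling rule: one worker per `per_worker` pending messages, capped."""
    if backlog <= 0:
        return 0
    return min(max_workers, -(-backlog // per_worker))  # ceiling division
```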

Cache Implementation

Using Redis-based caching on Movestax brings three major improvements:

Connection Pooling: Keep connections ready to use instead of initializing them repeatedly

Dependency Caching: Store pre-compiled modules and configuration data for quick access

Response Caching: Save results of frequently run queries to avoid redundant processing
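Connection reuse and response caching both rely on state that survives between invocations on a warm instance. The sketch below uses an in-memory SQLite connection as a stand-in for a pooled database connection and a plain dictionary as a stand-in for Redis. It is not Movestax's or Redis's API, and the 30-second TTL is an arbitrary assumption.

```python
from __future__ import annotations

import sqlite3
import time
from typing import Any, Callable

# Module-level state survives between invocations while the instance stays warm.
_conn: sqlite3.Connection | None = None
_cache: dict[str, tuple[float, Any]] = {}


def get_conn() -> sqlite3.Connection:
    """Open the connection once per instance instead of once per request."""
    global _conn
    if _conn is None:
        _conn = sqlite3.connect(":memory:")  # stand-in for a pooled database connection
        _conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, name TEXT)")
        _conn.executemany("INSERT INTO items (name) VALUES (?)", [("alpha",), ("beta",)])
    return _conn


def cached(key: str, ttl_s: float, compute: Callable[[], Any],
           now: Callable[[], float] = time.monotonic) -> Any:
    """Return a cached value until it expires; a dict stands in for Redis here."""
    t = now()
    hit = _cache.get(key)
    if hit is not None and hit[0] > t:
        return hit[1]
    value = compute()
    _cache[key] = (t + ttl_s, value)
    return value


def handler(event: dict) -> dict:
    rows = cached("items:all", 30.0,
                  lambda: get_conn().execute("SELECT name FROM items ORDER BY id").fetchall())
    return {"items": [name for (name,) in rows]}
```

An in-process cache is lost when the instance is reclaimed. That is why a shared store such as Redis is useful: new instances can start with a warm cache.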

Architecture Patterns

Adopting modern architecture patterns can noticeably reduce cold start times. For example:

Edge Computing: Deploy functions closer to users to cut down on latency

Microservices: Break large functions into smaller, more manageable pieces to speed up initialization and optimize resource use

Movestax's managed services simplify these approaches with easy deployment options and infrastructure management. These methods work alongside earlier optimizations to further minimize latency in your serverless functions.

Movestax Features

Movestax takes cold start optimization to the next level by incorporating specialized tools designed to minimize delays and improve efficiency.

Platform Overview

Movestax is built with a serverless-first approach, offering tools that reduce cold start delays and simplify app management:

Managed Databases: Seamless integration with PostgreSQL, MongoDB, and Redis for efficient data handling.

Instant Deployment: A streamlined process to make deployments faster and easier.

RabbitMQ Integration: Built-in support for message queues.

n8n Workflow Engine: Hosted workflows for automating tasks effortlessly.

These tools work alongside established methods like code optimization and resource tuning. The platform’s unified interface ensures smooth management of apps, databases, and workflows. Let’s dive into how its monitoring tools improve performance tracking.

Monitoring Tools

Movestax includes monitoring tools that provide actionable data to fine-tune performance:

| Feature | Function | Benefit |
|---|---|---|
| Performance Metrics | Tracks function initialization times | Helps pinpoint areas for improvement |
| Resource Usage | Monitors memory and CPU consumption | Improves resource allocation |
| Advanced Analytics | Examines execution patterns | Enables data-driven adjustments |

These tools help identify bottlenecks and uncover opportunities for performance improvements.

New Features

Movestax is introducing several updates, some aimed directly at cold start performance:

Serverless Functions: A new runtime designed to reduce initialization time.

Built-in Authentication: Integrated services for handling user authentication.

Object Storage Integration: Faster and more efficient data access.

API Marketplace: Pre-built components ready for use.

Additionally, an AI assistant offers natural language guidance on managing cold starts, making performance optimization even more straightforward.

Conclusion

Key Points

Improving cold start performance comes down to a few areas: lean code with minimal dependencies, well-tuned resource settings, function warming, and continuous measurement. Regular monitoring shows where to optimize next and whether each change actually helped.

Implementation Steps

Turn these insights into practical actions:

Establish Performance Baseline

Regularly measure cold start times

Set clear goals for performance and document delays and resource usage

Optimize Infrastructure

Use a serverless-first approach for easier management

Adjust resource settings for efficiency

Automate workflows where possible

Monitor and Adjust

Keep tracking performance metrics

Review resource usage patterns

Use collected data to refine and improve performance
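For the "Monitor and Adjust" step, a small comparison against the stored baseline can flag regressions automatically, for example in a CI job or a scheduled report. This sketch assumes baseline and current values are kept per metric, such as p95 values for the metrics in the targets table. It treats an increase of more than 10% as a regression. Both the metric names and the tolerance are hypothetical.

```python
from __future__ import annotations


def regressions(baseline: dict[str, float], current: dict[str, float],
                tolerance: float = 0.10) -> list[str]:
    """List metrics whose current value exceeds the baseline by more than `tolerance` (0.10 = 10%)."""
    return sorted(
        metric for metric, base in baseline.items()
        if metric in current and current[metric] > base * (1 + tolerance)
    )
```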

Looking Forward

As serverless computing continues to advance, staying on top of optimizations is essential. Combining these methods with platform improvements should keep cold start delays in check. Tools like Movestax simplify the process by offering features for deployment, monitoring, and automation. Make it a habit to review performance and adjust your strategies as needed, and consider how Movestax’s all-in-one tools could make your next deployment smoother.